Taking a Nap

Personal Blog about anything - mostly programming, cooking and random thoughts

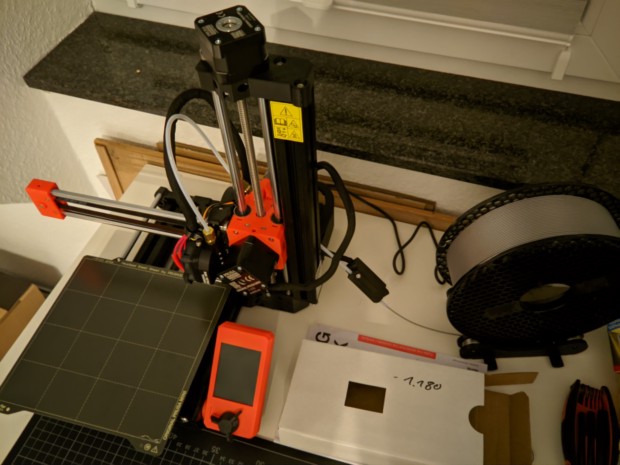

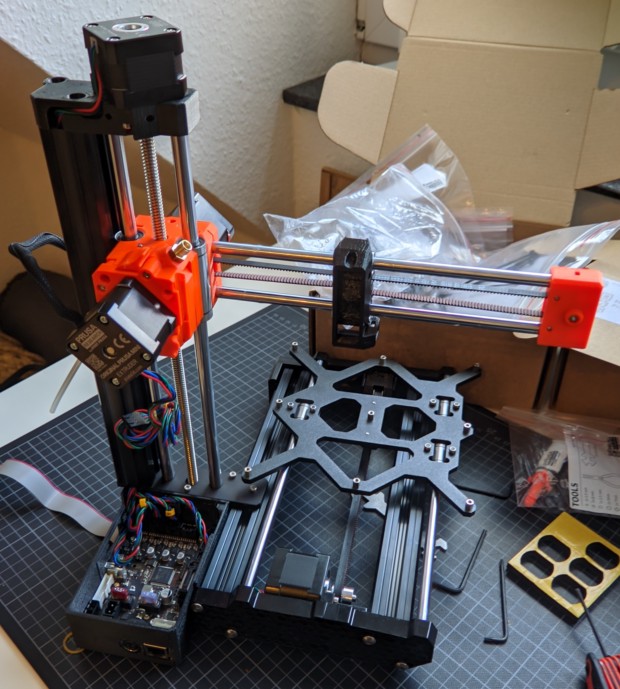

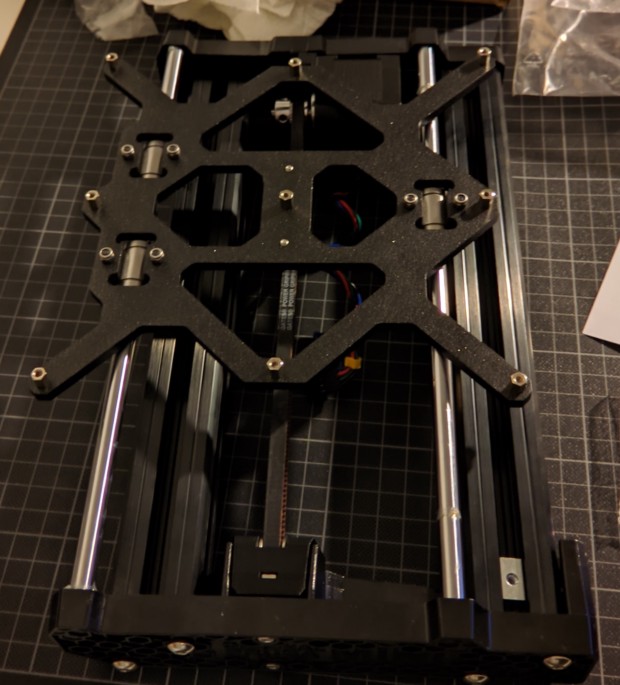

After finishing my 3D printer and running the first test print, I wanted to make a case for a temperature and humidity sensor. I use the ESP8266, a small microcontroller with WiFi capabilities, and the DHT22 as the temperature and humidity sensor.

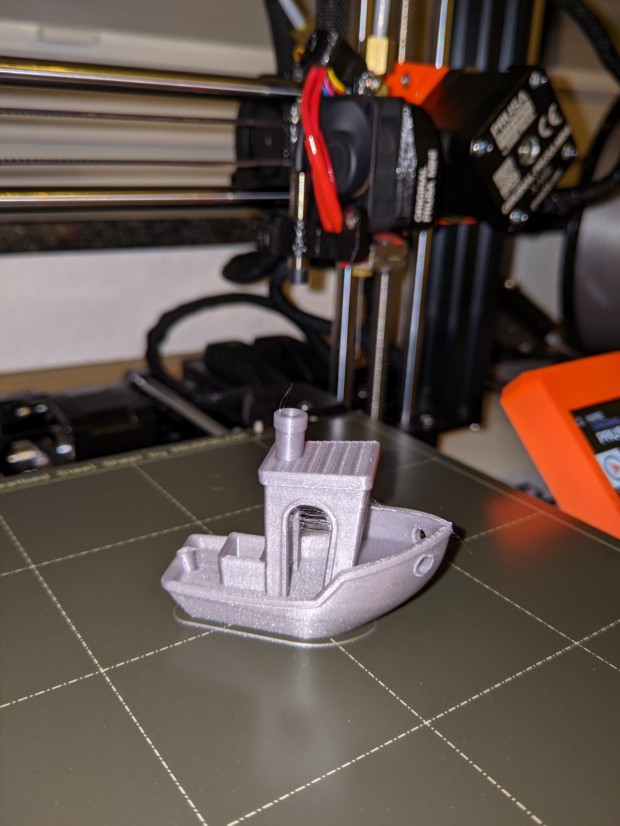

I started by building small test print using Blender, to test if the ESP8266 could be mounted on small pins. Luckily I took this step, as I mismeasured the spacing of the pins, which could be easily fixed at this stage. Additionally the print showed that my printer was stringing a lot, which I fixed by changing the retraction settings.

The next step was to design the case. For this I simply added 1mm thick walls around the mounting. Using boolean functions I've added holes for the USB port and the reset button. Additionally I've added a lid to the case, which was simply placed on a 0.5mm lip in the case.

The design revealed some issues, which I fixed in the next iteration. The holes in the lid were too small and the printer was having trouble printing them. The USB port cutout had to be moved and increased slightly. Additionally the box was to small to fit the ESP8266 and the DHT22 and I added 1cm to the height of the box. These changes led to the second design.

I used this design for a day, which revealed another issue. The sensor was reporting too high temperatures, by about 3 degrees Celsius. This was caused by the fact that the DHT22 sensor was too close to the ESP8266. I had to separate the two components by increasing the size of the box and adding a separation wall.

As I had to redesign the case anyway, I also redesigned the lid. I changed the design of the holes from cubes to octagons. The lid is now held in place by protusions which fit into holes in the case. I added additional holes to the case, where the DHT22 sensor would be located.

The case still has some potential for improvement, but I'm happy with the current design for now.

The files for the case can be found below and can be used under the CC BY-SA 4.0 license.

This was the first 3D print I did, to test the printed. The result is great, stringing being the only issue. I will now try out different retraction settings to reduce stringing.

I baked pizza for dinner. My daughter got her own piece with tomatoes.

Das Rezept basiert auf dem Dinkelvollkornbrot mit Buttermilch von Plötzblog und ist eine vegane Anpassung. Die Saaten können variiert werden, benutzt einfach was ihr da habt und mögt.

Vorteig und Saaten mindestens 2 Stunden ruhen lassen.

Hauptteig per Hand kneten bis homogen.

Hauptteig 2 Stunden gehen lassen und währenddessen 3 mal falten

In Sonnenblumkerne rollen und in gefettete Backform geben.

Mindestens 12 Stunden in Kühlschrank gehen lassen, Volumen sollte sich etwas verdoppeln. Ansonsten vor dem backen bei Raumtemperatur weiter gehen lassen.

Vor dem Backen befeuchten und bei 180°C Ober-/Unterhitze für 90 Minuten backen.

Anschließend 10 Minuten ohne Form backen.

Alles bei 190° für 30-40 Minuten backen.