[Helsinki] Serenity

Personal Blog about anything - mostly programming, cooking and random thoughts

I'm currently helping to evaluate a large market research survey, which uses Likert scales. To visualize the data I've tried several plots. The plots below were created with artificial data to expose the strengths and weaknesses of the different plot types.

Data distribution:

You can find the code for all plots here!

Extension of first plot.

I've recently run into a paradoxical situation while training a network to distinguish between two classes.

I've used cross-entropy as my loss of choice. On my training set the loss steadily decreased while the F1-Score improved. On the validation set the loss decreased briefly before increasing and leveling off around ~2, normally a clear sign of overfitting. However, the F1-Score on the validation set kept rising and reached ~0.92, with similarly high precision and recall.

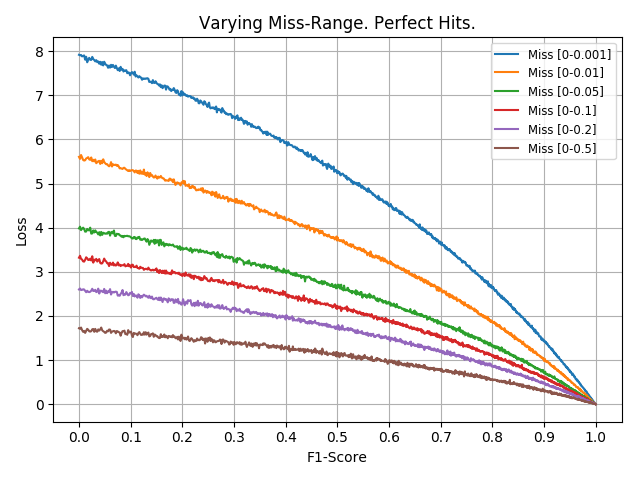

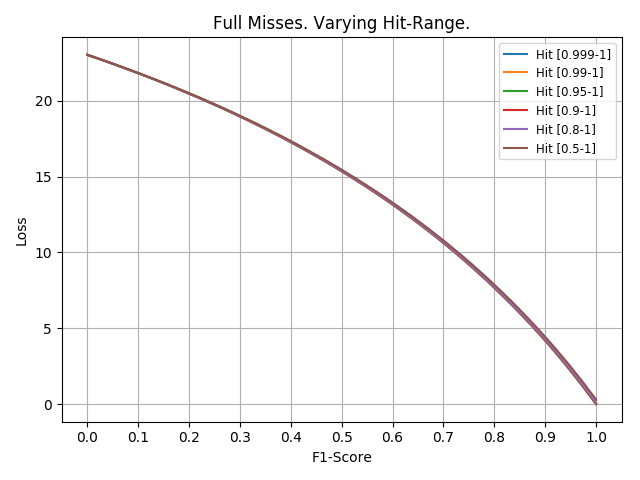

As I had never taken a closer look at the relation between the F1-Score and the cross-entropy loss, I decided to run a quick simulation and plot the results. The plots show the cross-entropy in relation to the F1-Score. In the first graph I varied the confidence range in which the misses landed, while hits were always perfect. The graph shows that misses made with high confidence create higher losses, even at high F1-Scores. In contrast, the confidence of hits has a negligible influence on the loss, as depicted in the second graph.

It seems likely that my network developed a high confidence in its predictions, only answering 0 or 1, which provoked a high loss while still achieving reasonably high accuracy.
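The effect can be reproduced with a few lines of Python. This is not the code behind the plots above, only a minimal sketch under my own assumptions: balanced random labels, a fixed share of misses, and a single confidence value each for hits and misses. All function and parameter names (`simulate`, `miss_confidence`, `hit_confidence` and so on) are made up for illustration.

```python
import math
import random


def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(y_true)


def f1_score(y_true, y_prob, threshold=0.5):
    """F1-Score of the positive class after thresholding the probabilities."""
    tp = fp = fn = 0
    for y, p in zip(y_true, y_prob):
        pred = 1 if p >= threshold else 0
        if pred == 1 and y == 1:
            tp += 1
        elif pred == 1 and y == 0:
            fp += 1
        elif pred == 0 and y == 1:
            fn += 1
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def simulate(n, miss_rate, miss_confidence, hit_confidence, seed=0):
    """Generate labels and predicted probabilities for class 1.

    Assumption: every miss assigns `miss_confidence` to the wrong class and
    every hit assigns `hit_confidence` to the correct class (both > 0.5).
    """
    rng = random.Random(seed)
    y_true, y_prob = [], []
    for _ in range(n):
        y = rng.randint(0, 1)
        is_miss = rng.random() < miss_rate
        predicted = 1 - y if is_miss else y
        conf = miss_confidence if is_miss else hit_confidence
        # Convert "confidence in the predicted class" to P(class == 1).
        y_prob.append(conf if predicted == 1 else 1.0 - conf)
        y_true.append(y)
    return y_true, y_prob


# Same labels and same misses (same seed), only the confidence of misses differs.
labels, cautious = simulate(5000, miss_rate=0.08, miss_confidence=0.6, hit_confidence=0.999)
_, confident = simulate(5000, miss_rate=0.08, miss_confidence=0.999, hit_confidence=0.999)

print("F1 (cautious misses): ", f1_score(labels, cautious))
print("F1 (confident misses):", f1_score(labels, confident))
print("Loss (cautious misses): ", binary_cross_entropy(labels, cautious))
print("Loss (confident misses):", binary_cross_entropy(labels, confident))
```

Because both runs use the same seed, the thresholded predictions and therefore the F1-Score are identical; only the loss changes. A single miss at confidence 0.999 contributes -ln(0.001) ≈ 6.9 to the sum, compared to -ln(0.4) ≈ 0.92 for a miss at confidence 0.6, so a small share of very confident misses is enough to dominate the mean loss.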

A simple recipe for a wheat flour loaf baked in a tin. The seed mix can be adjusted to taste.

Mix the ingredients for the pre-ferment well and let it rise for 12 hours at room temperature.

Mix the seeds and pour hot water over them. Cover with cling film and let them soak for about 1 hour.

Combine all ingredients for the main dough and knead by hand into a soft, slightly sticky dough.

Let the dough rise for 90 minutes, folding it in the bowl every 30 minutes.

Shape the dough on a floured work surface and place it directly into the loaf tin.

Let it rise for another 2 hours, until the dough has roughly doubled in volume.

Before baking, score the dough lengthwise and moisten it well (e.g. with a spray bottle).

Preheat the oven to 250 °C and bake the bread for 10 minutes. Then reduce the temperature to 200 °C and bake for another 35 minutes. Remove the bread from the tin and bake it for a further 10 minutes on a rack.

I transferred the blog to a new server. Hopefully everything still works.

Update:

https://pathfinder.libove.org running on new server

https://tools.libove.org running on new server